AUTONOMOUS SPACE AGE.

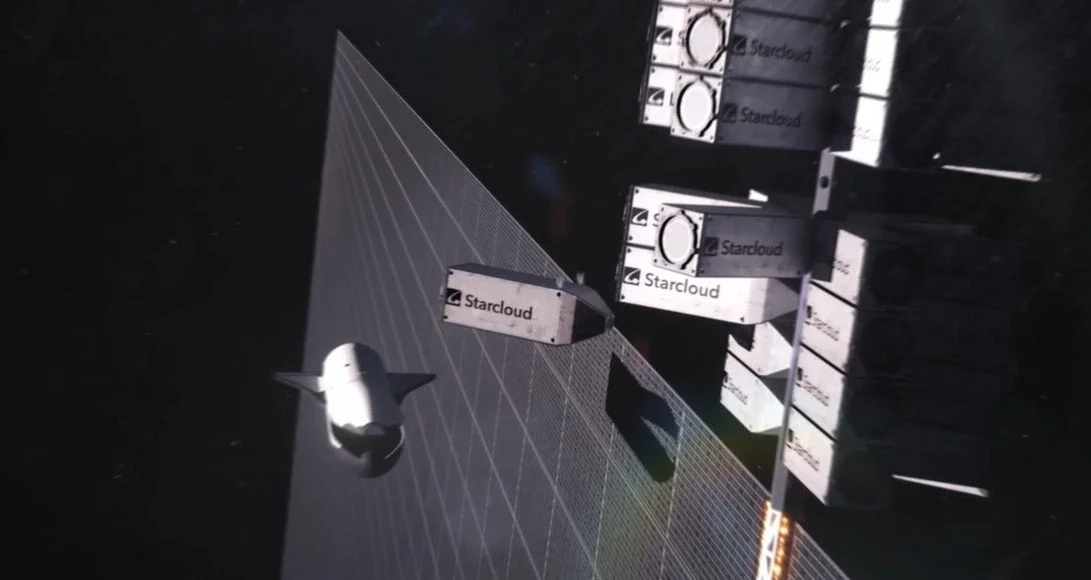

AI and Space Are Converging. Starcloud plans to build a 5-gigawatt orbital data center with very large solar and cooling panels, roughly 4 kilometers wide and 4 kilometers long.

Over the past 10 weeks, a remarkable pattern has emerged: AI infrastructure and space infrastructure are converging on the same frontier, namely orbital data centers and real-time compute at the edge of Earth's gravity well. What was once dismissed as speculative is now being actively pursued by startups and tech giants alike. This trend is reshaping not just how we think about data but where and how that data will be generated, processed, and applied, and it directly validates the strategic positioning of BUTTRESS and FOUNDATION at the core of this new era.

The Space Data Centre Race

Multiple companies have publicly announced plans to put compute infrastructure into orbit. These range from proof-of-concept small satellites to ambitious plans for multi-gigawatt orbital data ecosystems:

Starcloud (with NVIDIA support): A satellite carrying an NVIDIA H100 GPU, offering potentially 100× more compute than prior space-based efforts, is slated to demonstrate state-of-the-art AI processing in orbit, including training a model. NVIDIA Blog

Google’s Project Suncatcher: Engineers are designing a constellation of solar-powered satellites carrying Google AI chips, with prototype deployments targeted for 2027. Scientific American

Aetherflux’s “Galactic Brain”: A constellation of orbital data centers powered by continuous solar energy is being designed to support massive AI workloads. The Verge

Blue Origin & SpaceX: Blue Origin is developing orbital data center technology, and SpaceX’s Starlink architecture is being eyed as a foundation for future space compute payloads. Reuters

Additional players: Reports indicate companies such as Axiom Space, NTT, Ramon.Space, Sophia Space, and others are exploring space-based computing hardware. Data Center Dynamics

A recent Scientific American piece even notes that launch cost declines and uninterrupted solar power make orbit a compelling future platform for data centers, especially as terrestrial facilities face limits in power, land, and cooling capacity. Scientific American

Why Space Makes Sense for AI Compute

There are several technical pressures driving this shift:

Power Constraints on Earth: AI training and inference consume vast amounts of electricity, and terrestrial grids struggle to keep up without large environmental footprints.

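Cooling and Density Limits: Traditional data centers often rely on water-intensive cooling systems. Space eliminates the need for water and offers a cold radiative sink, although, because heat can leave only by radiation, orbital facilities need large radiator panels.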

Continuous Solar Power: In suitable orbits, such as dawn-dusk sun-synchronous low Earth orbits, sunlight is available nearly 24/7, enabling solar power to become the primary energy source for compute.

Massively Parallel Launch Potential: With reusable launch systems and vehicles like SpaceX’s Starship, the logistical barrier to placing compute in space is falling.
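To put the cooling point in rough numbers, a flat radiator rejects heat according to the Stefan-Boltzmann law, P = εσAT⁴ per radiating side. The sketch below is an idealized estimate under stated assumptions: a two-sided panel, an emissivity of 0.9, a 300 K radiator temperature, and no absorbed sunlight or Earth infrared. The helper `radiator_area_m2` is hypothetical and does not come from any of the projects named above.

```python
import math

# Stefan-Boltzmann constant (CODATA exact value), W m^-2 K^-4
STEFAN_BOLTZMANN = 5.670374419e-8


def radiator_area_m2(heat_w: float, temp_k: float,
                     emissivity: float = 0.9, sides: int = 2) -> float:
    """Idealized radiator area needed to reject heat_w watts at temp_k.

    Assumes the panel sees a 0 K sink (no absorbed sunlight or Earth IR),
    so the result is a lower bound on real-world area.
    """
    if heat_w < 0 or temp_k <= 0 or not 0 < emissivity <= 1 or sides not in (1, 2):
        raise ValueError("invalid radiator parameters")
    # Power radiated per square meter of one face
    flux_per_side = emissivity * STEFAN_BOLTZMANN * temp_k ** 4
    return heat_w / (flux_per_side * sides)


# Area per megawatt, and for a 5-gigawatt facility (assumed 300 K, two-sided)
per_mw = radiator_area_m2(1e6, 300)
five_gw_km2 = radiator_area_m2(5e9, 300) / 1e6
print(f"{per_mw:,.0f} m^2 per MW; {five_gw_km2:.1f} km^2 for 5 GW "
      f"(~{math.sqrt(five_gw_km2):.1f} km on a side)")
```

Under these assumptions, each megawatt of waste heat needs roughly 1,200 m² of two-sided radiator, so a 5-gigawatt facility needs on the order of 6 square kilometers of radiator before sunlight loading is even considered. That scaling helps explain why gigawatt-class designs call for kilometer-scale panels.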

BUTTRESS vs. Legacy Ground Stations

Today’s satellites rely on ground stations that downlink raw data in bursts. These legacy facilities are:

Bottlenecked by cadence: Most satellites can downlink only during a few passes per day, over a handful of remote sites far from users.

Latency-limited: Raw data can take hours to reach processing centers, and running inference can take hours more.

Dispersed & disconnected: Data must be transferred over terrestrial networks before it can be analysed.
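The cadence bottleneck can be made concrete with simple arithmetic. Assuming passes are evenly spaced through the day and data is captured at uniformly random times, the average wait before the next downlink window is half the gap between passes, and the worst case is the full gap. The function `downlink_wait_hours` is a hypothetical illustration, not a model of any particular ground network; it ignores pass duration, onboard queueing, and terrestrial backhaul.

```python
def downlink_wait_hours(passes_per_day: int) -> tuple[float, float]:
    """Mean and worst-case wait (hours) from data capture to the next pass.

    Assumes evenly spaced passes and capture times uniform over the day.
    """
    if passes_per_day < 1:
        raise ValueError("need at least one pass per day")
    gap = 24.0 / passes_per_day  # hours between consecutive passes
    return gap / 2, gap          # uniform arrival -> mean wait is half the gap


for passes in (2, 4, 24):
    mean, worst = downlink_wait_hours(passes)
    print(f"{passes:>2} passes/day: mean wait {mean:.1f} h, worst {worst:.1f} h")
```

With four passes a day, freshly captured data waits three hours on average (six in the worst case) before it even leaves the satellite, and only then do backhaul and inference time begin to accumulate.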

In contrast, BUTTRESS is architected to sit adjacent to customers, orbital interconnects, and inference demand:

Near real-time downlinks: Rather than infrequent batch transfers, orbital compute protocols can deliver tokens and inference outputs continuously.

Proximity to demand: Situating compute nodes in and near cities and launch hubs collapses latency and expands operational agility.

If the future of intelligence is real-time, responsive, and autonomous, then it cannot be tethered to once-a-day downlinks and long terrestrial backhaul routes. BUTTRESS delivers the connective tissue for an orbital AI ecosystem that treats low latency as a structural requirement, not a nice-to-have.

FOUNDATION: Launch Capacity for an “Airline Cadence”

One of the most striking predictions about the near future of space operations comes from SpaceX CEO Elon Musk:

“Yes. In about 6 or 7 years, there will be days where Starship launches more than 24 times in 24 hours.”

— Elon Musk Yahoo Finance

This forecast — that Starship could achieve daily launch cadences rivaling airline operations by the early 2030s — transforms the economics and logistics of orbital infrastructure. It signals a shift from rare, event-based access to space toward persistent, high-throughput launch capability.

But supporting hourly, round-the-clock Starship flights at scale will require:

Expanded planetary launch infrastructure

Diverse spaceport locations (to increase throughput and resilience)

Integrated urban launch hubs connected to supply chains and labor markets

Regulatory and logistical systems capable of handling frequent traffic
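A back-of-the-envelope calculation shows why throughput pushes toward more launch sites. If each pad needs a fixed turnaround time between launches, the number of pads required grows with cadence multiplied by turnaround. The 12-hour turnaround used below is a purely hypothetical figure, not a SpaceX specification, and `pads_needed` is an illustrative helper.

```python
import math


def pads_needed(launches_per_day: float, pad_turnaround_hours: float) -> int:
    """Minimum pads to sustain a launch rate.

    Assumes each pad hosts one launch per turnaround period and launches
    are spread evenly; ignores weather, range conflicts, and downtime.
    """
    if launches_per_day < 0 or pad_turnaround_hours <= 0:
        raise ValueError("rate must be non-negative and turnaround positive")
    # Each pad supports 24 / turnaround launches per day
    return math.ceil(launches_per_day * pad_turnaround_hours / 24)


# ">24 launches in 24 hours" with a hypothetical 12-hour pad turnaround
print(pads_needed(25, 12))
```

Even under optimistic turnaround assumptions, sustaining more than one launch per hour means many pads operating in parallel, and real-world factors such as weather and range scheduling only raise that number.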

This is precisely the role that FOUNDATION is designed to play — expanding terrestrial and cislunar launch capacity in a way that supports the coming era of continuous orbital operations. By embedding launch infrastructure within broader transdomainal urban systems, FOUNDATION ensures that the West’s space economy is not constrained by outdated, siloed facilities.

Cities as the Substrate of the Autonomous Space Age

Taken together, these developments — orbital data centers, new launch paradigms, real-time inference requirements — imply one strategic truth:

The infrastructure of the autonomous space age will not be built on isolated ground stations and desert launch sites, but on integrated urban systems that connect Earth, orbit, and AI. We believe this is why SpaceX is building Starbase City.

Cities and spaceport cities like FOUNDATION + Starbase will host:

High-frequency command and control

Downlink and inter-orbital relay stations

AI inference hubs adjacent to aerospace clusters

Redundant, city-scale compute meshes

Workforce ecosystems trained for both space and AI industries

In this picture, urban AI and space infrastructure is not a sci-fi curiosity; it is the next logical step in urban evolution, just as steam trains and factories dictated the layout of industrial cities in the 19th century and digital networks reshaped them in the 20th.

The message embedded in these orbital data-center announcements is unmistakable: the next industrial revolution will be trans-orbital, and the winners will be the societies that fuse their cities, compute, and launch infrastructure early. Legacy ground-station architectures cannot support real-time AI, and legacy spaceports cannot support airline-cadence Starship operations. The West needs a new infrastructure layer. BUTTRESS supplies the intelligence grid; FOUNDATION supplies the launch grid. Taken together, they form the physical and orbital spine of a Western-led autonomous space economy — the infrastructures on which prosperity, security, and strategic advantage will depend.

Links:

Aetherflux “Galactic Brain” project (orbital data centers for AI) The Verge

Blue Origin & SpaceX working on orbital data center tech Reuters

Google’s Project Suncatcher orbital data initiatives Scientific American

Starcloud’s space-based data center plans with NVIDIA GPUs NVIDIA Blog

Elon Musk predicts >24 Starship launches per day in ~6–7 years Yahoo Finance

![ROMULLUS | [Sci-Fi] Infrastructure for the West and Her Allies](http://images.squarespace-cdn.com/content/v1/65b3c1f0a4d6295a6ebd4b19/d4a8aeab-602b-41b7-b59f-958880d76a5b/ROMULLUS-61.png?format=1500w)